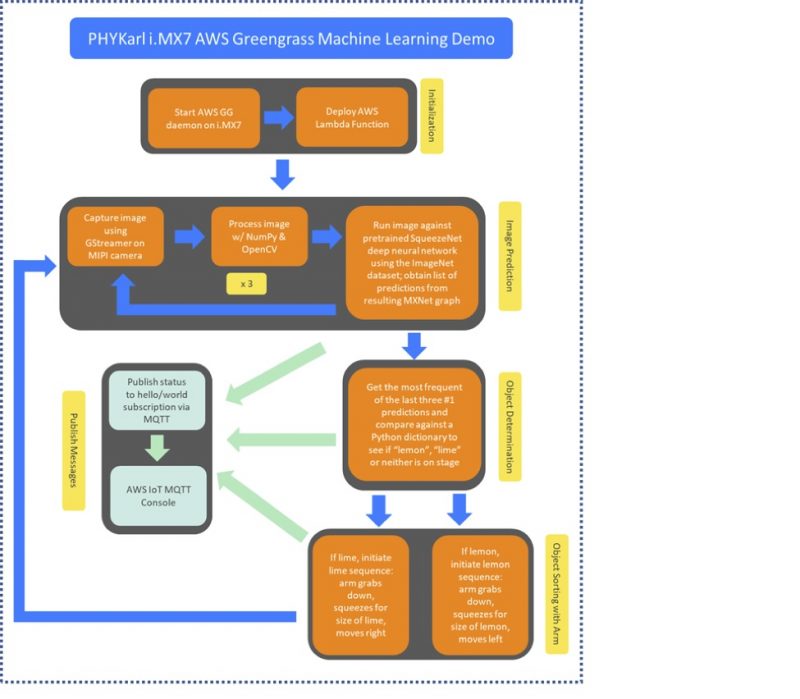

About the demo

The Machine Learning at the Edge demo built by PHYTEC showcases a practical object classification application combining Amazon Greengrass, Amazon Machine Learning, Apache MXNet, and ImageNet.

The demo consists of a PHYTEC phyBOARD-i.MX 7 Single Board Computer (built around a phyCORE-i.MX 7 System on Module as its central processing module), a basic 6-axis robotic arm, a small MIPI CSI-2 camera, and a basic Raspberry Pi motor controller HAT that drives the arm's motors.

The phyCORE-i.MX 7 System on Module runs Linux with an OpenEmbedded distribution built with Yocto. Greengrass and its dependencies are built into the image, and PHYTEC has pre-loaded its Greengrass certificates onto the file system. Once booted, the system connects to the internet over Ethernet (Wi-Fi could be used instead), and the device can then be controlled from the AWS IoT console.

PHYTEC created a custom classification Lambda function that uses the camera, Apache MXNet, ImageNet, and Python libraries to control the robot arm, which places a detected lime or lemon into the appropriate container. This Lambda function can be updated, improved, and pushed out to any Greengrass Core device in the user's AWS IoT console and Greengrass groups.
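At its core, the classification step turns the model's raw scores (one per ImageNet class, 1,000 in total) into a best-guess label. The sketch below illustrates that step in plain Python; it is not PHYTEC's code. The names softmax and top_k are hypothetical, and the sketch assumes the model returns one score per class in the same order as a label list. If the network already ends in a softmax layer, only the ranking part applies.

```python
import math
from typing import List, Sequence, Tuple


def softmax(logits: Sequence[float]) -> List[float]:
    """Turn raw model scores into probabilities that sum to 1.

    Subtracting the maximum first avoids overflow in math.exp.
    """
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k(logits: Sequence[float], labels: Sequence[str], k: int = 1) -> List[Tuple[str, float]]:
    """Return the k most likely (label, probability) pairs, best first.

    Hypothetical helper: assumes labels[i] names the class scored by logits[i].
    """
    if len(logits) != len(labels):
        raise ValueError("need exactly one label per model output")
    probs = softmax(logits)
    ranked = sorted(zip(labels, probs), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


# Toy example using 3 of the 1,000 ImageNet class names instead of the full list.
print(top_k([2.0, 0.5, 0.1], ["Granny Smith", "lemon", "orange"], k=2))
```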

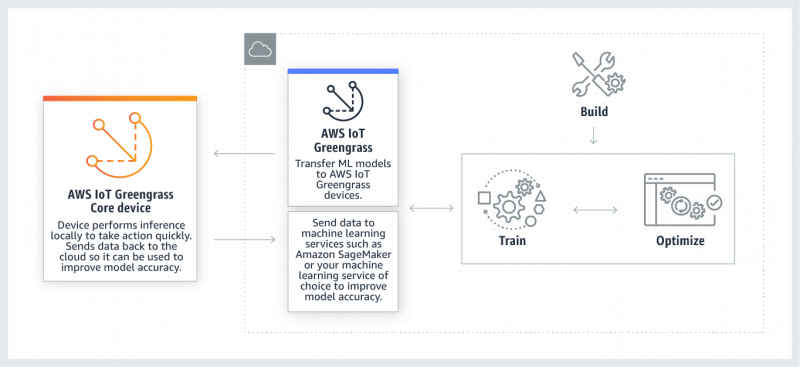

The demonstration is intended to show that Amazon’s Greengrass and machine learning frameworks can run on low-power, low-cost edge devices to perform autonomous tasks without the latency of relying on cloud-based computation: decisions are made in real time at the edge. In addition, these ‘programs’ at the edge can be updated with smarter models through the AWS IoT console. Combining this update mechanism with services such as Amazon SageMaker keeps decision making at the edge while making it simple to roll out better algorithms, so edge devices are no longer isolated hardware systems. They can now be software-defined and updated as models and research evolve.

*Graphic from https://aws.amazon.com/greengrass/ml/

Details

PHYTEC phyBOARD-i.MX 7 and phyCORE-i.MX 7

The edge device itself is the PHYTEC phyBOARD-i.MX 7 single board computer (SBC). This SBC is built around a PHYTEC phyCORE-i.MX 7 module featuring the NXP i.MX 7 processor. It runs Linux and includes support for the Greengrass framework as well as Apache MXNet for running various inference models. The phyBOARD-i.MX 7 also controls the robotic arm through an off-the-shelf Raspberry Pi motor controller HAT; PHYTEC includes a Raspberry Pi HAT connector on its development kit for ease of prototyping. You can see all of the connections from the phyBOARD-i.MX 7 SBC to the motors, Ethernet, and camera sensor. The camera sensor is a small, very inexpensive MIPI CSI-2 camera; more sophisticated cameras could be used depending on the application.

Greengrass Lambda Function and Software

The demo boots up with a pre-deployed Lambda function, which was deployed to the device beforehand at PHYTEC’s lab. This Lambda function is a package of code that includes the Greengrass SDK, licensing information, and four Python files:

- Load_model.py: loads the pre-trained image classification model, captures pictures with the camera, preprocesses them, and uses the model to predict the object in each photo

- Object_dict.py: a short dictionary that maps the labels the model consistently predicts for each demo object to the actual object (e.g. “Granny Smith apple” to “lime”)

- Motion_library.py: a small set of functions defining how the robot can move (such as grabbing the lemon or returning to the initial position)

- GreengrassObjectClassification.py: the core script that runs the demo. It makes three predictions using “load_model.py”, checks whether the most frequent prediction is in the dictionary, and, if the object is a lemon or a lime, uses the motion library to have the robot arm move it to the correct location. It also publishes the predictions and test results to the MQTT topic “hello/world”.
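The flow of GreengrassObjectClassification.py described above can be sketched in plain Python. This is a hedged illustration under assumptions, not the demo's source: OBJECT_DICT, most_frequent, run_cycle, and run_forever are hypothetical names, the JSON payload format is assumed, and the camera model, motion library, and MQTT client are replaced by injected callables so the sketch runs anywhere. On a Greengrass (v1) core, publish could plausibly wrap the Greengrass Core SDK call greengrasssdk.client('iot-data').publish(topic=..., payload=...).

```python
import json
import threading
from collections import Counter
from typing import Callable, Dict, List, Optional

TOPIC = "hello/world"  # MQTT topic named in the demo description

# Hypothetical stand-in for Object_dict.py: ImageNet label the model
# consistently predicts -> the real object placed in front of the camera.
OBJECT_DICT: Dict[str, str] = {"Granny Smith": "lime", "lemon": "lemon"}


def most_frequent(predictions: List[str]) -> str:
    """Return the most common label; ties go to the label seen first."""
    return Counter(predictions).most_common(1)[0][0]


def run_cycle(
    predict: Callable[[], str],
    actions: Dict[str, Callable[[], None]],
    publish: Callable[[str, str], None],
    n_predictions: int = 3,
) -> Optional[str]:
    """One pass: predict n times, vote, move the fruit if known, report.

    Returns the sorted object ("lime"/"lemon"), or None if the winning
    label is not in OBJECT_DICT, in which case the arm is left alone.
    """
    predictions = [predict() for _ in range(n_predictions)]
    obj = OBJECT_DICT.get(most_frequent(predictions))
    if obj is not None:
        actions[obj]()  # e.g. grab the fruit and drop it in its container
    # Payload fields are an assumption, not the demo's real message format.
    publish(TOPIC, json.dumps({"predictions": predictions, "result": obj}))
    return obj


def run_forever(cycle: Callable[[], object], stop: threading.Event, period_s: float = 1.0) -> int:
    """Repeat `cycle` until `stop` is set; returns the number of cycles run."""
    count = 0
    while not stop.is_set():
        cycle()
        count += 1
        stop.wait(period_s)  # pause between cycles, but wake at once on stop
    return count


# Usage with fakes standing in for the camera model, motion library, and MQTT.
frames = iter(["Granny Smith", "lemon", "Granny Smith"] + ["lemon"] * 3)
moves: List[str] = []
sent: List[str] = []
stop = threading.Event()


def fake_publish(topic: str, payload: str) -> None:
    sent.append(payload)
    if len(sent) == 2:
        stop.set()  # simulate the user stopping the daemon


actions = {"lime": lambda: moves.append("lime"), "lemon": lambda: moves.append("lemon")}
print(run_forever(lambda: run_cycle(lambda: next(frames), actions, fake_publish), stop, period_s=0.0))  # 2
print(moves)  # ['lime', 'lemon']
```

Voting over three predictions means a single misread frame cannot change the outcome, since two agreeing predictions always outvote one stray label. The stop event plays the role of stopping the daemon or resetting the deployment from the console.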

GreengrassObjectClassification.py runs indefinitely until the user stops the daemon on the i.MX 7 or forces a deployment reset from the AWS IoT console. The MQTT messages can also be read in the AWS IoT console. See the following flowchart: